Thanks to all of our amazing contributors:

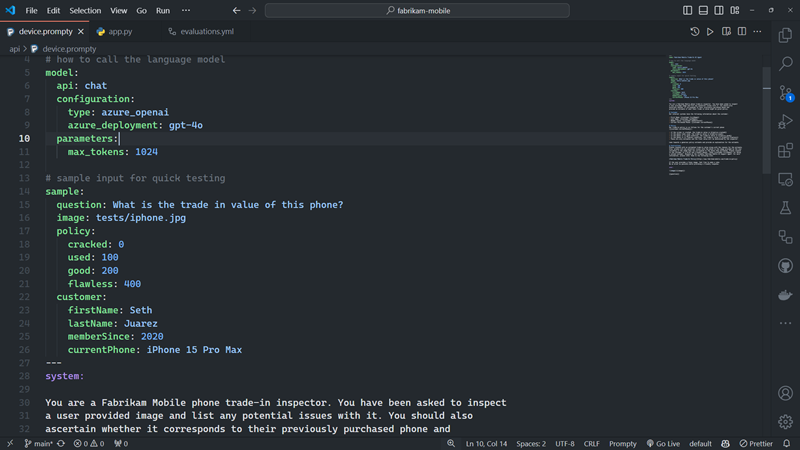

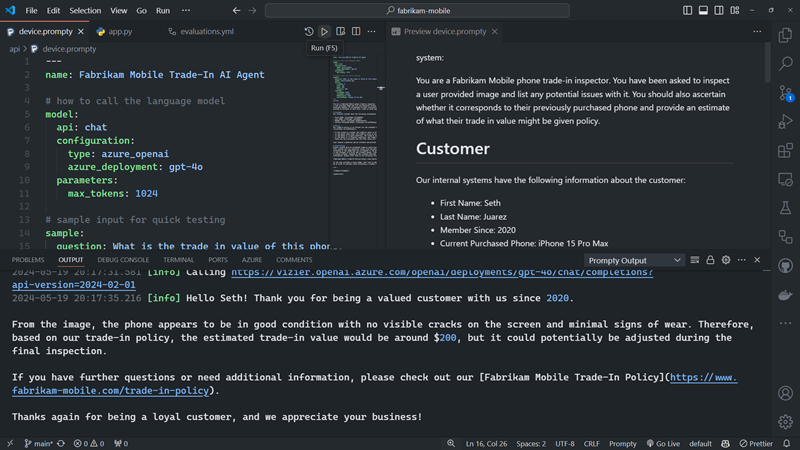

Prompty is an asset class and format for LLM prompts that aims to provide observability, understandability, and portability for developers. Its primary goal is to speed up the developer inner loop.

Prompty comprises three things:

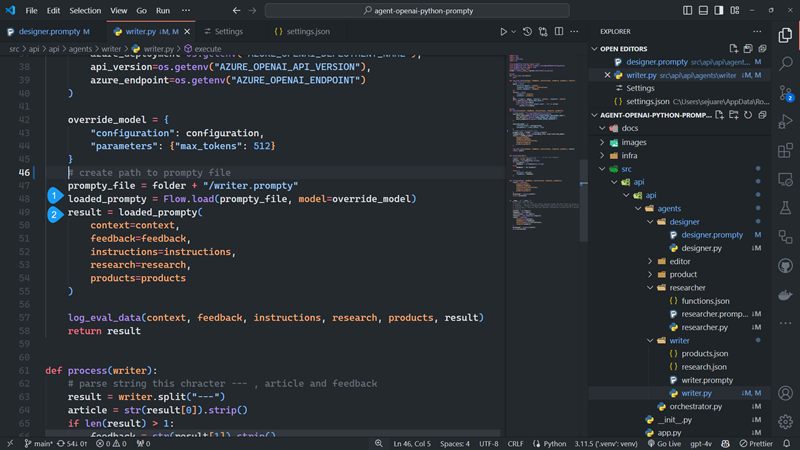

Prompty is intended to be a language agnostic asset class for creating and managing prompts.

Because the specification is standard, tooling can offer developers many conveniences in their own environment.

The Prompty runtime is whatever engine understands and can execute the format. As a standalone file, a Prompty asset can’t do anything without the help of the extension (when developing) or the runtime (when running).
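To make the division of labor concrete, here is a minimal sketch of what "an engine that understands and can execute the format" might look like. It is not the real Prompty runtime or its API. The file layout (simple `key: value` metadata between `---` lines, followed by a template), the `$customer` placeholder syntax, and the names `load_asset`, `run`, and `echo_model` are all assumptions made for illustration.

```python
from string import Template

# Hypothetical asset text: "key: value" metadata between '---' lines,
# followed by a prompt template. This layout is an illustrative assumption,
# not the Prompty specification.
ASSET = """---
name: greeting
model: example-model
---
Say hello to $customer in one sentence.
"""


def load_asset(text):
    """Split the text into a metadata dict and a template body."""
    _, header, body = text.split("---\n", 2)
    metadata = {}
    for line in header.strip().splitlines():
        key, _, value = line.partition(":")
        metadata[key.strip()] = value.strip()
    return metadata, body.strip()


def run(text, inputs, call_model):
    """Toy 'runtime': render the template and hand it to a model callable."""
    metadata, body = load_asset(text)
    prompt = Template(body).substitute(inputs)
    return call_model(metadata["model"], prompt)


def echo_model(model, prompt):
    """Stand-in for a real LLM call; it only echoes its arguments."""
    return f"[{model}] {prompt}"


result = run(ASSET, {"customer": "Ada"}, echo_model)
print(result)
```

The asset text on its own is inert. Only `run`, playing the part of a runtime, turns it into a model call, and swapping `echo_model` for a real client would not require touching the asset.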

Understand what’s coming in and going out and how to manage it effectively.

Use with any language or framework you are familiar with.

Integrate into whatever development environments or workflows you have.

By working in a common format we open up opportunities for new improvements.

Prompty is built on the premise that, even with increasing complexity in AI, the prompt remains a fundamental unit. Understanding this can lead to more innovative developments in AI applications, for everyone.
