Using LangChain

This guide explains how to use Prompty templates with the LangChain framework and the langchain-prompty integration to build generative AI applications.

What is LangChain

LangChain is a composable framework for building context-aware, reasoning applications powered by large language models and using your organization’s data and APIs. It has an extensive set of integrations with both platforms (like Azure AI) and tools (like Prompty), allowing you to work seamlessly with a diverse set of model providers and integrations.

Explore these resources: Microsoft Integrations · langchain-prompty docs · langchain-prompty package · langchain-prompty source

In this section, we’ll show you how to get started using Prompty with LangChain - and leave you with additional resources for self-guided exploration.

Pre-Requisites

We’ll use the langchain-prompty Python API with an OpenAI model. You will need:

- A development environment with Python 3 (3.11+ recommended)
- Visual Studio Code with the Prompty extension installed
- An OpenAI developer account with an API key you can use from code:
  - Get: Create an API key in your OpenAI settings.
  - Set: Use it as the value of the `OPENAI_API_KEY` environment variable.
- The LangChain Python packages for OpenAI and Prompty:
  - Use: `pip install -qU "langchain[openai]"`
  - Use: `pip install -U langchain-prompty`
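Before starting, you can confirm the prerequisites from Python. This is a small sketch, not part of Prompty or LangChain: `missing_prerequisites` is a hypothetical helper that checks the `OPENAI_API_KEY` variable and looks up the import names we assume the two pip commands provide (`langchain`, `langchain_openai`, `langchain_prompty`) using the standard `importlib.util.find_spec`.

```python
import importlib.util
import os


def missing_prerequisites(
    env_vars=("OPENAI_API_KEY",),
    modules=("langchain", "langchain_openai", "langchain_prompty"),
):
    """Return a list of missing prerequisites; an empty list means everything was found."""
    problems = []
    for name in env_vars:
        # Treat an unset or empty variable as missing
        if not os.environ.get(name):
            problems.append(f"environment variable {name} is not set")
    for module in modules:
        # find_spec returns None when a top-level module cannot be located
        if importlib.util.find_spec(module) is None:
            problems.append(f"Python package providing {module!r} is not installed")
    return problems


if __name__ == "__main__":
    issues = missing_prerequisites()
    for issue in issues:
        print("missing:", issue)
    if not issues:
        print("All prerequisites found.")
```

The check only confirms that the packages can be imported and the key is present; it does not verify that the key is valid.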

Let’s get started!

Quickstart: Hello, LangChain!

Let’s start with a basic LangChain application that uses language models. This lets us validate that the LangChain setup is working before we try using Prompty.

IMPORTANT:

- On that page, under Select chat model, pick OpenAI in the dropdown.
- Next, install the `langchain[openai]` package using this command: `pip install -qU "langchain[openai]"`
- Now create a new file called `hello_langchain.py` in the local directory.
- Copy over this code (adapted from the sample provided there):

```python
import getpass
import os

if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter API key for OpenAI: ")

from langchain.chat_models import init_chat_model

model = init_chat_model("gpt-4o-mini", model_provider="openai")

# Added lines for simple test
response = model.invoke("What can you tell me about your tents? Respond in 1 paragraph.")
print(response.content)
```

- Run the application using this command: `python3 hello_langchain.py`
- If you have not already set the `OPENAI_API_KEY` environment variable in that terminal, you will be prompted to enter it interactively (the input is hidden, like a password).

On a successful run, you should see a valid text response. Here are two sample responses:

Our tents are designed for durability, comfort, and ease of setup...

I don't sell products or own physical items, so I don't have any tents to offer...

These are generic responses to that question. What if we wanted to customize the response with our template and data? This is where Prompty can help.
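To see what a template saves you from, here is a sketch of customizing the quickstart by hand. `build_messages` is a hypothetical helper that packs a system prompt (with the customer’s name and some context) and the user’s question into the list of `(role, content)` tuples that LangChain chat models accept as input. It only builds the list; sending it would need the `model` object from `hello_langchain.py`.

```python
def build_messages(first_name, question, context):
    """Hypothetical helper: hand-assemble (role, content) pairs for a chat model."""
    system = (
        "You are an AI assistant who helps people find information. "
        f"You are helping {first_name}; address them by name.\n"
        f"Use this context to personalize your answer:\n{context}"
    )
    return [("system", system), ("human", question)]


messages = build_messages(
    "Jane",
    "What can you tell me about your tents?",
    "The Alpine Explorer Tent boasts a detachable divider for privacy.",
)
print(messages[0][0], "|", messages[1][1])
# With the quickstart's `model`, you could then call: model.invoke(messages)
```

Every change to the wording or inputs here means editing Python code; moving that text into a `.prompty` file keeps the prompt separate from the orchestration code.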

Using: LangChain-Prompty

Let’s look at the langchain-prompty quickstart. You can also see the guidance in the package README.

- First, install the `langchain-prompty` package: `pip install -U langchain-prompty`

The `create_chat_prompt` function loads a `.prompty` file and returns a Runnable object that produces a LangChain chat prompt. We can then use the pipe operator (`|`) to chain Runnables (input, prompt, model, output) as desired, orchestrating the end-to-end workflow.
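To make the pipe operator concrete, here is a toy stand-in written in plain Python. `ToyRunnable` is hypothetical and far simpler than LangChain’s `Runnable`; it only shows the idea that `a | b` builds a new step that feeds `a`’s output into `b`. The fake "model" just upper-cases its input so the example runs without an API key.

```python
from typing import Any, Callable


class ToyRunnable:
    """A hypothetical, minimal stand-in for a LangChain Runnable: wraps a function and supports `|`."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "ToyRunnable") -> "ToyRunnable":
        # a | b returns a new runnable that passes a's output to b
        return ToyRunnable(lambda value: other.invoke(self.invoke(value)))


prompt = ToyRunnable(lambda inputs: f"Hi {inputs['firstName']}: {inputs['question']}")
model = ToyRunnable(lambda text: {"content": text.upper()})  # fake "model" returning a message-like dict
parser = ToyRunnable(lambda message: message["content"])  # like StrOutputParser: keep only the text

chain = prompt | model | parser
print(chain.invoke({"firstName": "Jane", "question": "tents?"}))
```

The shape `prompt | model | parser` is the same one the real chain uses below; each stage only needs to accept what the previous stage returns.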

Let’s use this to refactor our previous example to use Prompty.

- Create a `hello.prompty` file with a basic prompt template as shown:

```markdown
---
name: Basic Prompt
description: A basic prompt that uses the GPT-3 chat API to answer questions
authors:
  - author_1
  - author_2
model:
  api: chat
  configuration:
    azure_deployment: gpt-4o-mini
sample:
  firstName: Jane
  lastName: Doe
  question: Tell me about your tents
  context: >
    The Alpine Explorer Tent boasts a detachable divider for privacy,
    numerous mesh windows and adjustable vents for ventilation, and
    a waterproof design. It even has a built-in gear loft for storing
    your outdoor essentials. In short, it's a blend of privacy, comfort,
    and convenience, making it your second home in the heart of nature!
---

system:
You are an AI assistant who helps people find information. As the assistant,
you answer questions briefly, succinctly, and in a personable manner using
markdown and even add some personal flair with appropriate emojis.

Also add in dad jokes related to tents and outdoors when you begin your response to {{firstName}}.

# Customer
You are helping {{firstName}} to find answers to their questions.
Use their name to address them in your responses.

# Context
Use the following context to provide a more personalized response to {{firstName}}:
{{context}}

user:
{{question}}
```

- Create a `hello_prompty.py` file in the same folder, with this code:

```python
import getpass
import os

if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter API key for OpenAI: ")

from langchain.chat_models import init_chat_model

model = init_chat_model("gpt-4o-mini", model_provider="openai")

from pathlib import Path

# Load hello.prompty from the same folder as this script
folder = Path(__file__).parent.absolute().as_posix()

from langchain_prompty import create_chat_prompt

prompt = create_chat_prompt(folder + "/hello.prompty")

from langchain_core.output_parsers import StrOutputParser

parser = StrOutputParser()

# prompt -> model -> plain-string output
chain = prompt | model | parser

# Pass the template variables directly; keys match the {{...}} placeholders in hello.prompty
response = chain.invoke({"question": "Tell me about your tents", "firstName": "Jane", "lastName": "Doe"})
print(response)
```

- Run the application above from the command line: `python3 hello_prompty.py`
- You should see a response that looks something like this:

Hey Jane! 🌼 Did you hear about the guy who invented Lifesavers? He made a mint!

Now, as for tents, if you mean camping tents, they typically come in various shapes and sizes, including dome, tunnel and pop-up styles! They can be waterproof, have multiple rooms, or even be super easy to set up. What kind of adventure are you planning? 🏕️

Compare this code to the previous version. Do you see the changes that take you from an inline question to a prompt template? You can now modify the Prompty template and re-run the script to iterate quickly on your prototype.
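When you iterate on the template, remember that every `{{name}}` placeholder is filled from the dictionary you pass to `chain.invoke`. The sketch below is a simplified stand-in for that template step, not langchain-prompty’s renderer: `render` is a hypothetical helper that handles only plain `{{name}}` variables (no escaping, sections, or partials). A real mustache renderer outputs an empty string for a missing variable; this sketch raises `KeyError` instead, so a mismatched key such as `name` versus `firstName` shows up immediately.

```python
import re

# Matches {{name}} placeholders, allowing spaces inside the braces
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template, inputs):
    """Fill {{name}} placeholders from `inputs`, raising KeyError on unknown names."""

    def substitute(match):
        key = match.group(1)
        if key not in inputs:
            raise KeyError(f"no input named {key!r}")
        return str(inputs[key])

    return PLACEHOLDER.sub(substitute, template)


template = "You are helping {{firstName}}.\nuser:\n{{question}}"
print(render(template, {"firstName": "Jane", "question": "Tell me about your tents"}))
```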

Using: VS Code Extension

The Prompty Visual Studio Code extension helps you create and run Prompty assets within your IDE, speeding up the inner loop of your development workflow. Read the Prompty Extension Guide for a more detailed explanation of how to create your first prompty.

In this section, we’ll focus on using the extension to convert the .prompty file to LangChain code and get a running application out of the box that you can customize later.

- Make sure you have already completed the previous steps of this tutorial. This ensures that your VS Code environment has the required LangChain Python packages installed.

- Create a new `basic.prompty` file by using the New Prompty option in the Visual Studio Code extension’s dropdown menu. You should see something like this:

```markdown
---
name: ExamplePrompt
description: A prompt that uses context to ground an incoming question
authors:
  - Seth Juarez
model:
  api: chat
  configuration:
    type: azure_openai
    azure_endpoint: ${env:AZURE_OPENAI_ENDPOINT}
    azure_deployment: <your-deployment>
    api_version: 2024-07-01-preview
  parameters:
    max_tokens: 3000
sample:
  firstName: Seth
  context: >
    The Alpine Explorer Tent boasts a detachable divider for privacy,
    numerous mesh windows and adjustable vents for ventilation, and
    a waterproof design. It even has a built-in gear loft for storing
    your outdoor essentials. In short, it's a blend of privacy, comfort,
    and convenience, making it your second home in the heart of nature!
  question: What can you tell me about your tents?
---

system:
You are an AI assistant who helps people find information. As the assistant,
you answer questions briefly, succinctly, and in a personable manner using
markdown and even add some personal flair with appropriate emojis.

# Customer
You are helping {{firstName}} to find answers to their questions.
Use their name to address them in your responses.

# Context
Use the following context to provide a more personalized response to {{firstName}}:
{{context}}

user:
{{question}}
```

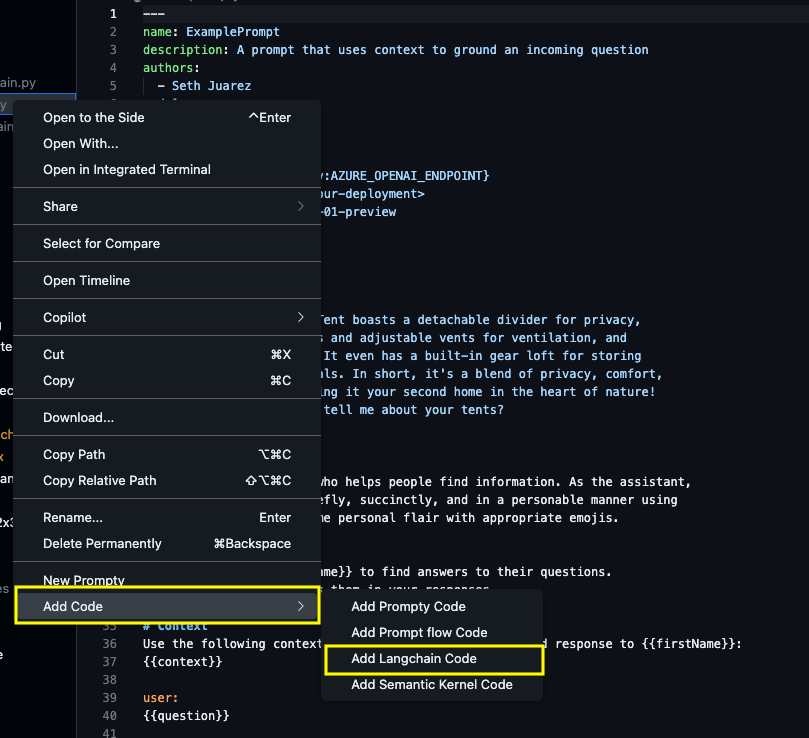

Select that

basic.promptyfile in your VS Code File Explorer and click for the drop-down menu. You should now see options toAdd Codeas shown below.

- Select the Add Langchain Code option. You should now see a `basic_langchain.py` file created for you in the local folder. It should look something like this:

```python
import getpass
import os
import json

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# pip install langchain-prompty
from langchain_prompty import create_chat_prompt
from pathlib import Path

# load prompty as langchain ChatPromptTemplate
# Important Note: Langchain only support mustache templating. Add
#  template: mustache
# to your prompty and use mustache syntax.
folder = Path(__file__).parent.absolute().as_posix()
path_to_prompty = folder + "/basic.prompty"
prompt = create_chat_prompt(path_to_prompty)

os.environ["OPENAI_API_KEY"] = getpass.getpass()
model = ChatOpenAI(model="gpt-4")
output_parser = StrOutputParser()

chain = prompt | model | output_parser

json_input = '''{
  "firstName": "Seth",
  "context": "The Alpine Explorer Tent boasts a detachable divider for privacy, numerous mesh windows and adjustable vents for ventilation, and a waterproof design. It even has a built-in gear loft for storing your outdoor essentials. In short, it's a blend of privacy, comfort, and convenience, making it your second home in the heart of nature!\\n",
  "question": "What can you tell me about your tents?"
}'''
args = json.loads(json_input)

result = chain.invoke(args)
print(result)
```

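- Run the file using the command: `python basic_langchain.py`. The generated code always calls `getpass.getpass()`, so you will be prompted (with `Password:`) to paste your OpenAI API key.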

- You should see a valid response like this:

Hello Seth! 👋

Our key tent offering at the moment is the Alpine Explorer Tent. It's quite the ultimate companion for your outdoor adventures! 🏕️

Here's a quick rundown of its features:

- It has a **detachable divider**, offering the option for privacy when needed. Perfect when camping with friends or family.
- Numerous **mesh windows and adjustable vents** help ensure ventilation, keeping the interior fresh and cool. No need to worry about stuffiness!
- The Alpine Explorer Tent is **waterproof**, so you're covered, literally and figuratively, when those unexpected rain showers happen.
- It even has a **built-in gear loft** for storing your outdoor essentials. So you won't be fumbling around in the dark for your torchlight or that bag of marshmallows!

In short, our tent is designed to provide a blend of privacy, comfort, and convenience, making it your second home in the heart of nature! 🏞️😊

Is there anything specific you want to know about it, Seth?
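The generated script never reads `basic.prompty` itself; `create_chat_prompt` does. To make the file’s shape concrete: YAML front matter sits between two `---` lines (name, model, sample), and the template body below it uses lines such as `system:` and `user:` to start chat messages. The sketch below is a simplified illustration, not Prompty’s actual parser: `split_prompty` and `split_roles` are hypothetical helpers that do no YAML parsing and recognize only the `system`, `user`, and `assistant` markers.

```python
ROLE_MARKERS = ("system", "user", "assistant")


def split_prompty(text):
    """Split .prompty text into (front_matter, body) at the two '---' delimiter lines."""
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("expected the first line to be '---'")
    # Index of the closing '---' line, or None if it is missing
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        raise ValueError("missing closing '---' line")
    return "\n".join(lines[1:end]), "\n".join(lines[end + 1:])


def split_roles(body):
    """Group body lines under 'system:' / 'user:' / 'assistant:' markers.

    Lines before the first marker are ignored in this sketch.
    """
    messages = []
    for line in body.splitlines():
        stripped = line.strip()
        if stripped.endswith(":") and stripped[:-1].lower() in ROLE_MARKERS:
            messages.append((stripped[:-1].lower(), []))
        elif messages:
            messages[-1][1].append(line)
    return [(role, "\n".join(content).strip()) for role, content in messages]


doc = """---
name: ExamplePrompt
---
system:
You are helping {{firstName}}.

user:
{{question}}
"""

front_matter, body = split_prompty(doc)
print(front_matter)
print(split_roles(body))
```

Markdown headings such as `# Customer` stay inside the current message, which matches how they appear under `system:` in `basic.prompty`.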

Observe the following:

- The code is similar to the previous step, with minor differences in how the default model is set and how input arguments are passed.
- The prompty asset includes a default context that grounds the response. This suggests how you could extend the sample for retrieval augmented generation (RAG) by supplying that context at runtime from other service integrations.
- The process demonstrates how settings in the asset can be overridden at runtime. The asset specifies defaults for the model configuration (azure_openai), but the execution chain uses a different model (openai), which takes precedence: `create_chat_prompt` only builds the prompt, so the model you pipe into the chain is the one that runs.
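Building on the RAG observation, here is a minimal sketch of looking up context at runtime instead of relying on the asset’s sample. It assumes an in-memory list of documents and uses a naive keyword-overlap score as a stand-in for a real search service; `retrieve_context` and `build_args` are hypothetical helpers, and the documents are toy data. The returned dictionary has the same keys (`firstName`, `context`, `question`) as `args` in `basic_langchain.py`.

```python
import re


def _words(text):
    """Lowercase word set used for a crude keyword-overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_context(question, documents):
    """Hypothetical retriever: return the document sharing the most words with the question."""
    query = _words(question)
    # max() keeps the first document on ties and raises ValueError if `documents` is empty
    return max(documents, key=lambda doc: len(query & _words(doc)))


def build_args(first_name, question, documents):
    """Assemble the inputs basic.prompty expects: firstName, context, question."""
    return {
        "firstName": first_name,
        "context": retrieve_context(question, documents),
        "question": question,
    }


docs = [
    "The Alpine Explorer Tent has a detachable divider and a waterproof design.",
    "Our hiking boots feature a breathable lining.",
]
args = build_args("Seth", "Is the tent waterproof?", docs)
print(args["context"])
# In basic_langchain.py you could then call: result = chain.invoke(args)
```

Swapping `retrieve_context` for a call to a vector store or search API would leave the rest of the chain unchanged, since the prompt only sees the final `context` string.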

For reference: the .prompty and Python files described in this tutorial are also available as file assets under the web/docs/assets/code folder in the codebase.

Next Steps

Congratulations! You just learned how to use Prompty with LangChain to orchestrate more complex workflows! For your next steps, try one of the following:

- Take the examples from the previous steps and update them to use a different model provider or configuration. For instance, select a different chat model from the drop-down and use that as your starter code for loading the Prompty asset. What did you observe?

- Explore the How-to guides for other examples that extend the capabilities further. For instance, explore streaming chat (with history) or understand how to chain other prompts to create more complex workflows.

Want to contribute to the project? Updated guidance is coming soon.