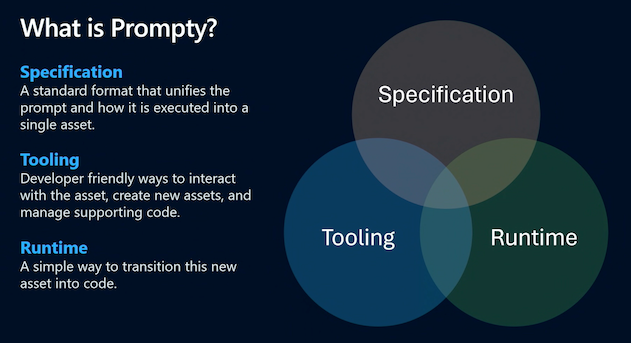

In this section, we cover the core building blocks of Prompty (specification, tooling, and runtime) and walk you through the developer flow and mindset for going from "prompt" to "prototype".

1. Prompty Components

The Prompty implementation consists of three core components: the specification (file format), the tooling (developer experience), and the runtime (executable code). Let's review these briefly.

1.1 The Prompty Specification

The Prompty specification defines the core .prompty asset file format. We'll look at this in more detail in the Prompty File Spec section of the documentation. For now, the basic.prompty sample below gives an intuitive sense of what an asset file looks like.
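A .prompty asset has two parts: a YAML frontmatter block between two `---` lines, and a prompt template whose `{{...}}` placeholders are filled in at run time. As a minimal sketch of that layout, assuming only the Python standard library, the code below separates the two parts and lists the inputs a template expects. The helpers `split_prompty` and `template_inputs` are hypothetical and are not part of the Prompty runtime; a real loader would also hand the frontmatter to a YAML parser (for example PyYAML's `yaml.safe_load`).

```python
import re

# Hypothetical helpers for illustration only; not the Prompty runtime API.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def split_prompty(text: str) -> tuple[str, str]:
    """Split a .prompty-style text into (frontmatter, template body).

    Assumes the text starts with a line containing only '---' and that the
    frontmatter is closed by the next line containing only '---'.
    """
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("expected an opening '---' line")
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == "---":
            frontmatter = "\n".join(lines[1:i])
            body = "\n".join(lines[i + 1:])
            return frontmatter, body
    raise ValueError("frontmatter is not closed by a '---' line")


def template_inputs(body: str) -> list[str]:
    """Return placeholder names in order of first appearance, without duplicates."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(body):
        if name not in seen:
            seen.append(name)
    return seen


example = """---
name: Basic Prompt
model:
  api: chat
---
system:
You are helping {{firstName}} {{lastName}} to find answers to their questions.

user:
{{question}}
"""

frontmatter, body = split_prompty(example)
print(frontmatter.splitlines()[0])  # name: Basic Prompt
print(template_inputs(body))        # ['firstName', 'lastName', 'question']
```

Listing the placeholders this way is one simple check that the sample values in the frontmatter cover every input the template needs.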

Learn More: The basic.prompty asset file

```
---
name: Basic Prompt
description: A basic prompt that uses the GPT-3 chat API to answer questions
authors:
  - sethjuarez
  - jietong
model:
  api: chat
  configuration:
    api_version: 2023-12-01-preview
    azure_endpoint: ${env:AZURE_OPENAI_ENDPOINT}
    azure_deployment: ${env:AZURE_OPENAI_DEPLOYMENT:gpt-35-turbo}
sample:
  firstName: Jane
  lastName: Doe
  question: What is the meaning of life?
---
system:
You are an AI assistant who helps people find information.
As the assistant, you answer questions briefly, succinctly,
and in a personable manner using markdown and even add some personal flair with appropriate emojis.

# Customer
You are helping {{firstName}} {{lastName}} to find answers to their questions.
Use their name to address them in your responses.

user:
{{question}}
```

1.2 The Prompty Tooling

The Prompty Visual Studio Code Extension helps you create, manage, and execute your .prompty assets, effectively giving you a playground right in your editor that streamlines your prompt engineering workflow and speeds up your prototype iterations. We'll get hands-on experience with this in the Tutorials section. For now, the summary below gives an intuitive sense of how it enhances your developer experience.

Learn More: The Prompty Visual Studio Code Extension

- Install the extension in your Visual Studio Code environment to get the following features out of the box:
  - Create a default basic.prompty starter asset, then configure models and customize content.
  - Create "pre-configured" starter assets for GitHub Marketplace Models in serverless mode.
  - Create "starter code" from the asset for popular frameworks (e.g., LangChain).
  - Use the prompty command-line tool to execute a .prompty asset and "chat" with your model.
  - Use settings to create named model configurations for reuse.
  - Use the toolbar icon to view and switch quickly between named configurations.
  - View the "runs" history, and drill down into a run with a built-in trace viewer.
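Named configurations pair naturally with the `${env:NAME}` and `${env:NAME:default}` references seen in the basic.prompty sample. The extension keeps named configurations in its VS Code settings; the Python sketch below only illustrates the idea. It assumes that `${env:NAME}` reads an environment variable and that `${env:NAME:default}` falls back to the default when the variable is unset, which is how the sample's `azure_deployment` line reads. The configuration names, `resolve_env`, and `resolve_config` are hypothetical.

```python
import os
import re

# Matches ${env:NAME} or ${env:NAME:default}; the default part is optional.
ENV_REF = re.compile(r"\$\{env:([A-Za-z_][A-Za-z0-9_]*)(?::([^}]*))?\}")


def resolve_env(value: str, env=None) -> str:
    """Replace ${env:...} references with environment values (assumed semantics)."""
    env = os.environ if env is None else env

    def substitute(match: re.Match) -> str:
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]
        if default is not None:
            return default
        raise KeyError(f"environment variable {name} is not set and has no default")

    return ENV_REF.sub(substitute, value)


# Hypothetical named configurations, analogous to the ones kept in settings.
NAMED_CONFIGS = {
    "default": {
        "azure_endpoint": "${env:AZURE_OPENAI_ENDPOINT}",
        "azure_deployment": "${env:AZURE_OPENAI_DEPLOYMENT:gpt-35-turbo}",
    },
    "staging": {
        "azure_endpoint": "${env:STAGING_OPENAI_ENDPOINT}",
        "azure_deployment": "gpt-35-turbo",
    },
}


def resolve_config(name: str, env=None) -> dict:
    """Look up a named configuration and resolve its ${env:...} references."""
    return {key: resolve_env(val, env) for key, val in NAMED_CONFIGS[name].items()}


fake_env = {"AZURE_OPENAI_ENDPOINT": "https://example.openai.azure.com/"}
print(resolve_config("default", fake_env))
# {'azure_endpoint': 'https://example.openai.azure.com/', 'azure_deployment': 'gpt-35-turbo'}
```

Keeping secrets such as endpoints in environment variables, rather than in the asset file, lets the same .prompty asset be shared while each developer switches configurations locally.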

1.3 The Prompty Runtime

The Prompty Runtime helps you make the transition from a static asset (.prompty file) to executable code (in your preferred language and framework) that you can test interactively from the command line and integrate seamlessly into end-to-end development workflows for automation. Dedicated documentation on this is coming soon. In the meantime, the summary below lists the runtimes supported today; check back for updates on new runtime releases.

Learn More: Available Prompty Runtimes

Core runtimes provide the base package needed to run the Prompty asset with code. Prompty currently has two core runtimes, with more support coming.

- Prompty Core (python) → Available in preview.

- Prompty Core (csharp) → In active development.

Enhanced runtimes add support for orchestration frameworks, enabling complex workflows with Prompty assets:

- Prompt flow → Python core

- LangChain (python) → Python core (experimental)

- Semantic Kernel → C# core
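At its core, a runtime turns the static asset into a model request: it fills the template's placeholders with inputs, then splits the rendered text at role markers such as `system:` and `user:` into chat messages. The sketch below is a simplified, standard-library-only illustration of that step under those assumptions; the actual runtimes ship their own template renderer and parser, and `render` and `to_messages` are hypothetical names.

```python
import re

# Simplified, hypothetical renderer/parser; the actual runtimes ship their own.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")
ROLE_LINE = re.compile(r"^\s*(system|user|assistant)\s*:\s*$", re.IGNORECASE)


def render(template: str, inputs: dict) -> str:
    """Fill {{name}} placeholders from inputs; fail loudly on a missing input."""
    def fill(match: re.Match) -> str:
        name = match.group(1)
        if name not in inputs:
            raise KeyError(f"missing input: {name}")
        return str(inputs[name])

    return PLACEHOLDER.sub(fill, template)


def to_messages(rendered: str) -> list[dict]:
    """Split rendered text into chat messages at bare 'role:' lines."""
    messages: list[dict] = []
    for line in rendered.splitlines():
        role = ROLE_LINE.match(line)
        if role:
            messages.append({"role": role.group(1).lower(), "content": ""})
        elif messages:  # text before the first role line is ignored
            messages[-1]["content"] += line + "\n"
    for message in messages:
        message["content"] = message["content"].strip()
    return messages


body = """system:
You are helping {{firstName}} {{lastName}}.

user:
{{question}}"""

inputs = {"firstName": "Jane", "lastName": "Doe", "question": "What is the meaning of life?"}
for message in to_messages(render(body, inputs)):
    print(message)
# {'role': 'system', 'content': 'You are helping Jane Doe.'}
# {'role': 'user', 'content': 'What is the meaning of life?'}
```

One limitation of this sketch: a content line consisting only of a word like `user:` would be misread as a role marker, so a production parser needs a stricter rule.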

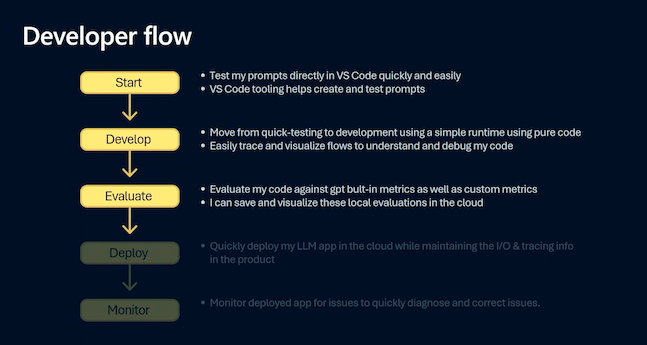

2. Developer Workflow

Prompty is ideal for rapid prototyping and iteration of a new generative AI application, using rich developer tooling and a local development runtime. It fits best into the ideation and evaluation phases of the GenAIOps application lifecycle as shown:

- Start by creating & testing a simple prompt in VS Code

- Develop by iterating on config & content, using tracing to debug

- Evaluate prompts with AI assistance, saved locally or to the cloud
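Tracing during the develop step means recording each run's inputs, output, and timing so you can inspect it afterwards. The extension's built-in trace viewer does this for you; purely to illustrate the idea, here is a hypothetical standard-library decorator that appends one record per call to an in-memory run history. `trace_run` and `RUNS` are illustrative names, not Prompty's tracer, and `answer` stands in for a real model call.

```python
import functools
import time

RUNS: list[dict] = []  # hypothetical in-memory "runs" history


def trace_run(func):
    """Record name, inputs, output (or error), and duration for each call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        record = {"name": func.__name__, "args": args, "kwargs": kwargs}
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
            record["output"] = result
            return result
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and on failure, so every call is recorded.
            record["duration_s"] = time.perf_counter() - start
            RUNS.append(record)
    return wrapper


@trace_run
def answer(question: str) -> str:
    # Stand-in for a model call; a real run would invoke the LLM here.
    return f"You asked: {question}"


answer("What is the meaning of life?")
print(RUNS[-1]["name"], RUNS[-1]["output"])
# answer You asked: What is the meaning of life?
```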

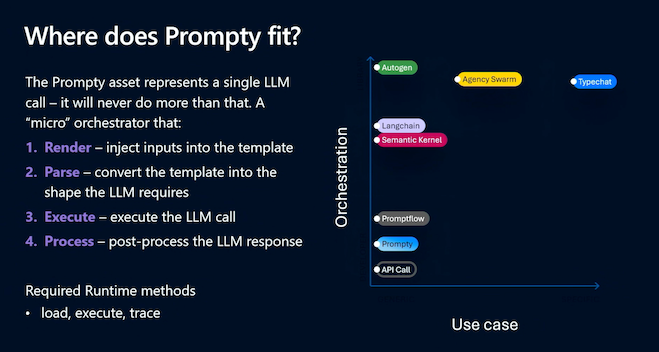

3. Developer Mindset

As an AI application developer, you are likely already using a number of tools and frameworks to enhance your developer experience. So, where does Prompty fit into your developer toolchain?

Think of it as a micro-orchestrator focused on a single LLM invocation: it sits one step above the basic API call and is positioned to support more complex orchestration frameworks layered above it. With Prompty, you can:

- configure the right model for that specific invocation

- engineer the prompt (system, user, context, instructions) for that request

- shape the data used to "render" the template on execution by the runtime
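The three responsibilities above map onto the pieces of a single request. As a hedged sketch, a micro-orchestrator could hold the model configuration and the prompt templates for one call, reshape raw application data into the template's inputs, and then build the chat request. The `Invocation` class, its fields, the record keys `full_name` and `message`, and the fallback to sample values are all assumptions of this sketch, and no model is actually called.

```python
import re
from dataclasses import dataclass, field

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


@dataclass
class Invocation:
    """Hypothetical micro-orchestrator for a single LLM call (no model is called)."""
    model: dict   # 1. model configuration chosen for this specific call
    system: str   # 2. prompt engineering: system instructions template
    user: str     #    ...and the user message template
    sample: dict = field(default_factory=dict)  # fallback template values (assumption)

    def shape_inputs(self, record: dict) -> dict:
        """3. Shape raw application data into the inputs the template expects."""
        shaped = dict(self.sample)
        name_parts = record.get("full_name", "").split(maxsplit=1)
        if len(name_parts) == 2:
            shaped["firstName"], shaped["lastName"] = name_parts
        if record.get("message"):
            shaped["question"] = record["message"].strip()
        return shaped

    def request(self, record: dict) -> dict:
        """Build the request payload for this one invocation."""
        inputs = self.shape_inputs(record)

        def fill(text: str) -> str:
            return PLACEHOLDER.sub(lambda m: str(inputs[m.group(1)]), text)

        return {
            "model": self.model,
            "messages": [
                {"role": "system", "content": fill(self.system)},
                {"role": "user", "content": fill(self.user)},
            ],
        }


call = Invocation(
    model={"api": "chat", "azure_deployment": "gpt-35-turbo"},
    system="You are helping {{firstName}} {{lastName}} to find answers to their questions.",
    user="{{question}}",
    sample={"firstName": "Jane", "lastName": "Doe", "question": "What is the meaning of life?"},
)
req = call.request({"full_name": "Ada Lovelace", "message": "  How do engines compute?  "})
print(req["messages"][0]["content"])
# You are helping Ada Lovelace to find answers to their questions.
print(req["messages"][1]["content"])
# How do engines compute?
```

Keeping the data shaping next to the template means a larger orchestration framework only has to pass raw records in, while the details of this one invocation stay in one place.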

Want to contribute to the project? Updated guidance is coming soon.